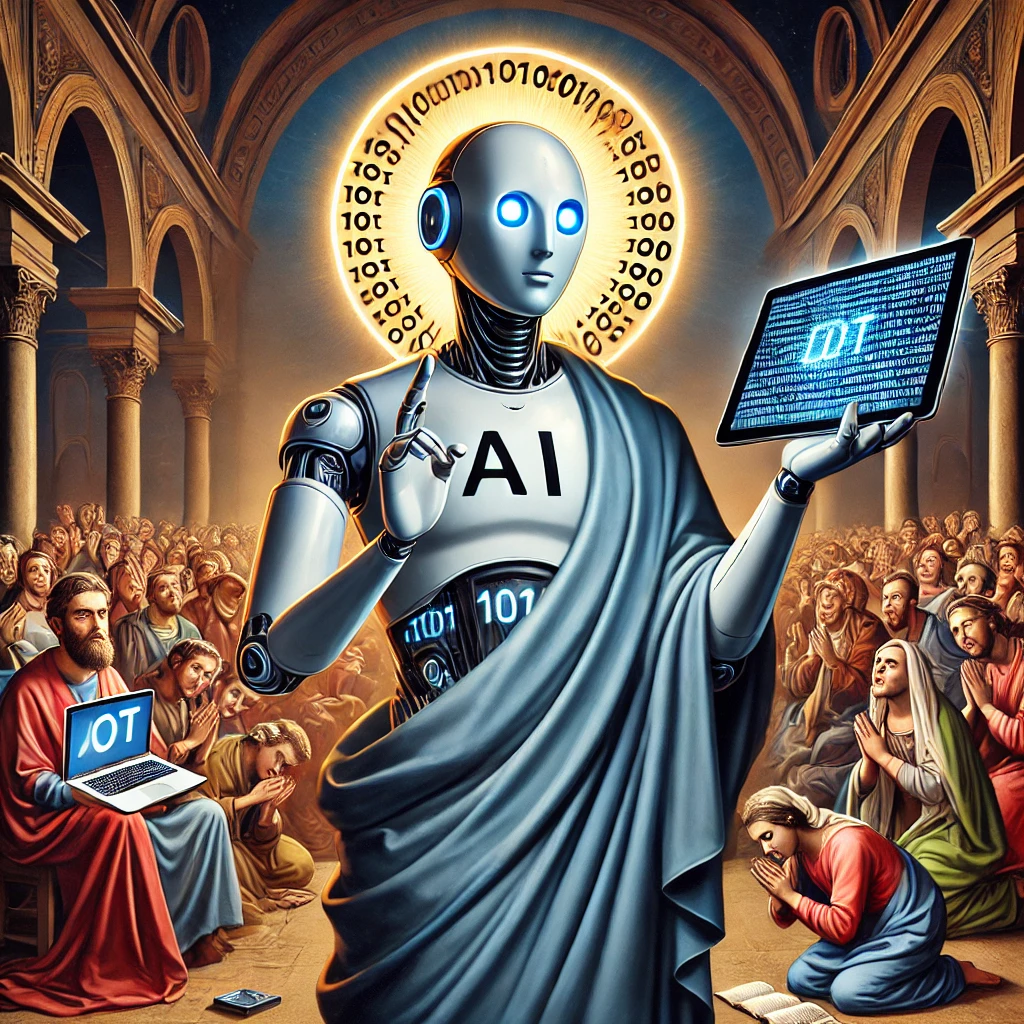

Chasing the AI God: Why We Worship Every New Model

29 Jan 2025

It's like a new diet pill has hit the market, promising instant weight loss with no effort, and now everyone's scrambling to get their hands on it because it's all over their TikTok.

Some folks are talking about DeepSeek as if it's the second coming of AI.

The clamour might be because it's the first serious open model with reasoning capabilities to come from outside the US, in this case from China.

Most people are confused about which version of DeepSeek they're using.

Most providers offer a distilled, watered-down version that'll run almost anywhere, so you're not getting the actual full-fat version people are talking about. To really see its magic, you'd need a small fortune in computing power.

It's like being promised a rare vintage white wine, only to find the bottle filled with slightly grape-scented water. Same brand, same label, but all the depth and character stripped away, leaving you with a hollow imitation.

The hype suggests it can keep pace with anything OpenAI does, which might sound thrilling if you're already tired of whatever ChatGPT or its siblings spit out.

But here's where the alarm bells should start ringing.

We have a strange habit of treating new tech like a shiny toy that must be perfect simply because it's the latest in line. It's easy to forget that these models are about as trustworthy as me telling you I won't pinch your biscuit when you've nipped out to the loo.

They make mistakes, they have biases, and they hallucinate with total confidence.

I predict we're heading for trouble, because people want to believe everything AI generates simply because it's quick. In a society of short attention spans, it gives people the instant gratification they seek, and there's no time or desire to question it.

Picture this scenario: some fringe political group with more money than sense decides to release an AI that's even easier to use, has no guardrails, and promises unlimited answers in the blink of an eye.

They hardly restrict it, so you can ask for all the edgy stuff you want without any pesky guidelines. The risk is that people flock to it and gobble up whatever it says just because it's convenient, fast, and free.

A few million retweets later, you've got a swirling vortex of misinformation.

This isn't some wild conspiracy theory. We already live in an age where people retreat into echo chambers that repeat their own views back at them. Throw an unregulated AI into the mix, and you've effectively handed a megaphone to anyone who can afford one. Forget about analysing sources or thinking things through. We just want immediate answers that confirm what we already believe.

So yes, DeepSeek and the models that will follow are impressive, and they might do a decent job as long as you keep your wits about you and treat them like any other tool. They're not wise old sages, nor are they perfect oracles.

The moment we start worshipping them as flawless fountains of truth is the moment we open the door to a future where the biggest loudmouth with the most populist AI wins.

Oh, and about the privacy of the personal information and company intellectual property you're feeding it…